AI agents promise faster decisions and less manual work, but they’re often treated like a black box. Actions get taken, alerts get closed, and no one is fully sure why. When things go wrong, that lack of clarity becomes a problem.

This guide explains how AI agents work in operational terms. It breaks down how they observe signals, make decisions, and act across monitoring and response workflows. The focus is on behavior you’ll see in real systems, not abstract theory.

You’ll learn what separates agents from simple automation, where they add value, and where human oversight still matters. If you’re evaluating AI agents for ops or monitoring, this will help you understand what you’re actually putting in control.

Key takeaways

- AI agents are intelligent software systems that interact with their environment, make decisions, and act independently to achieve specific goals.

- They are already helping companies automate tasks, speed up workflows, and make better use of data across everyday operations.

- Traditional monitoring tools fall short because AI agents change over time and rely on many moving parts.

- Real-time monitoring is essential, since even small delays or failures can quickly impact user experience or business outcomes.

- Because agents depend on APIs, models, and plugins, you need full visibility into every link in the chain to trace issues.

- Monitoring should be a continuous process that evolves with your agents and infrastructure.

- AI agents are moving toward full autonomy, where they can act, monitor, and self-correct with minimal human oversight.

What are AI agents?

An artificial intelligence (AI) agent is a software program designed to autonomously perceive its environment, process data, and take actions to accomplish specific goals or tasks on behalf of users or other systems. It improves over time by learning from new data and past experiences to make better decisions.

Imagine it as an intelligent assistant that works independently, handling tasks without needing constant human oversight.

How AI agents fit in today’s tech landscape

Modern workplaces are overwhelmed with too much information, too many tools, and constant context-switching. This fragmentation slows teams down and clouds decision-making. As technology continues to evolve, the impact of AI on the future of work is shaping how teams collaborate, automate, and make decisions in increasingly dynamic environments.

AI agents are emerging as a powerful solution. Unlike traditional tools, they don’t just automate tasks. They integrate across systems, coordinate actions, and make decisions in real time without constant human input.

One of their most transformative strengths lies in utilizing unstructured data. While enterprises today typically use only about 10% of their data, AI agents are making it possible to access and act on the remaining 90%, much of which is unstructured or previously inaccessible. This leads to better decisions, faster insights, and entirely new opportunities.

Another thing that sets AI agents apart is their adaptability. Unlike static scripts or rule-based automation, they learn from interactions, user behavior, and outcomes. That makes them ideal for handling complex, changing workflows with increasing accuracy and impact.

How AI agents actually work in monitoring systems

AI agents in monitoring are not autonomous fixers. They are decision engines that observe signals, apply rules or models, and take scoped actions based on predefined goals. Understanding that boundary prevents overtrust and bad implementations.

At a basic level, an AI agent follows a loop: observe, decide, act, then observe again. Observation comes from metrics, logs, events, or traces. Decision logic can be rule-based, model-driven, or a mix. Actions are usually limited to things like opening incidents, enriching alerts, adjusting thresholds, or triggering workflows.
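The loop above can be sketched in a few lines. This is a minimal illustration, not a production design: the `Observation` type, the 5% error-rate threshold, and the action names are assumptions made for the example, and the decision logic is purely rule-based.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Observation:
    service: str
    error_rate: float  # fraction of failed requests, 0.0 to 1.0


def decide(obs: Observation, threshold: float = 0.05) -> str:
    """Rule-based decision: pick one scoped action per observation."""
    if obs.error_rate >= threshold:
        return "open_incident"
    if obs.error_rate >= threshold / 2:
        return "enrich_alert"
    return "no_action"


def run_loop(observations: Iterable[Observation],
             act: Callable[[str, Observation], None]) -> List[str]:
    """Observe, decide, act, then move on to the next observation."""
    decisions = []
    for obs in observations:          # observe
        action = decide(obs)          # decide
        if action != "no_action":
            act(action, obs)          # act
        decisions.append(action)
    return decisions


if __name__ == "__main__":
    feed = [Observation("checkout", 0.01),
            Observation("checkout", 0.03),
            Observation("checkout", 0.09)]
    run_loop(feed, lambda action, obs: print(f"{action}: {obs.service} at {obs.error_rate:.0%}"))
```

A model-driven agent would swap out `decide` while keeping the same loop and the same narrow set of actions.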

The key difference from traditional monitoring is adaptability. Static monitoring relies on fixed thresholds. AI agents learn normal behavior over time and flag deviations. This helps in dynamic systems where traffic, load, or usage patterns change constantly and static rules either miss issues or create noise.
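One simple way to "learn normal behavior" is a rolling baseline with a z-score test. The sketch below is an assumption about how such a detector could look, not a description of any specific product; the window size, the limit of 3 standard deviations, and the class name are illustrative.

```python
from collections import deque
from statistics import mean, pstdev


class RollingBaseline:
    """Flags values that deviate from a rolling window of recent values."""

    def __init__(self, window: int = 30, z_limit: float = 3.0, min_samples: int = 5):
        self.values = deque(maxlen=window)
        self.z_limit = z_limit
        self.min_samples = min_samples

    def is_anomaly(self, value: float) -> bool:
        anomalous = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.z_limit
            else:
                anomalous = value != mu
        # Only learn from values that look normal, so one spike does not shift the baseline.
        if not anomalous:
            self.values.append(value)
        return anomalous
```

The trade-off in this design is that a legitimate, permanent shift in traffic keeps being flagged until someone resets or retrains the baseline, which is one reason change context matters.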

Most AI agents do not operate in isolation. They depend heavily on context. That includes service topology, recent deploys, historical baselines, and known dependencies. Without this context, agents surface anomalies without explaining why they matter, which slows response instead of speeding it up.
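To make the role of context concrete, here is a hedged sketch that attaches the most recent deploy in a service or its dependencies to an anomaly. The `Deploy` type, the dependency map, and the 30-minute lookback are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Deploy:
    service: str
    version: str
    at: datetime


def explain_anomaly(service: str, detected_at: datetime, deploys: List[Deploy],
                    dependencies: Dict[str, List[str]],
                    lookback: timedelta = timedelta(minutes=30)) -> str:
    """Attach the most recent deploy to the service or its dependencies, if any."""
    related = {service, *dependencies.get(service, [])}
    recent = [d for d in deploys
              if d.service in related and timedelta(0) <= detected_at - d.at <= lookback]
    if not recent:
        return f"Anomaly on {service}: no recent deploys in related services"
    latest = max(recent, key=lambda d: d.at)
    return f"Anomaly on {service}: {latest.service} deployed {latest.version} at {latest.at:%H:%M}"
```

With this kind of enrichment, an alert on a checkout service can point straight at a payments deploy from ten minutes earlier instead of leaving the responder to search for it.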

Action scope is intentionally narrow. In production systems, AI agents rarely make destructive changes. Instead, they assist humans by grouping alerts, identifying likely root causes, or suggesting next steps. Full automation without guardrails increases blast radius when the agent is wrong.
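A narrow action scope can be enforced with an explicit allow-list. The sketch below assumes hypothetical action names and a simple approval flag; real systems would tie approval to an actual review step.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical action names; the allow-list keeps destructive operations out of reach.
ALLOWED_ACTIONS = {"group_alerts", "annotate_incident", "suggest_runbook"}


def execute(action: str, approved_by_human: bool = False) -> str:
    """Run safe actions directly; anything else needs explicit human approval."""
    if action in ALLOWED_ACTIONS:
        return "executed"
    if approved_by_human:
        return "executed_with_approval"
    return "blocked_pending_approval"


def group_alerts(alerts: List[dict]) -> Dict[str, List[dict]]:
    """Group alerts by service so responders see one item per affected service."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert["service"]].append(alert)
    return dict(groups)
```

Keeping the allow-list in code (or config under version control) makes any widening of the agent's scope a reviewable change.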

Feedback loops matter more than models. Effective AI agents improve because humans validate or reject their outputs. When teams acknowledge alerts, close incidents, or override decisions, that feedback refines future behavior. Without this loop, agents stagnate and lose relevance.
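As a rough illustration of such a feedback loop, the sketch below nudges an anomaly threshold each time a human confirms or dismisses an alert. The step size and bounds are arbitrary assumptions, and real agents typically use richer signals than a single number.

```python
class FeedbackTuner:
    """Nudges an anomaly threshold based on how humans resolve alerts."""

    def __init__(self, z_limit: float = 3.0, step: float = 0.25,
                 min_limit: float = 2.0, max_limit: float = 6.0) -> None:
        self.z_limit = z_limit
        self.step = step
        self.min_limit = min_limit
        self.max_limit = max_limit

    def record(self, confirmed: bool) -> float:
        # A dismissed alert was noise: raise the limit (less sensitive).
        # A confirmed alert was useful: lower the limit (more sensitive).
        delta = -self.step if confirmed else self.step
        self.z_limit = min(self.max_limit, max(self.min_limit, self.z_limit + delta))
        return self.z_limit
```

The bounds matter: without them, a run of dismissals could make the agent effectively blind.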

There are also hard limits. AI agents struggle with rare events, planned changes, and novel failure modes. A migration, traffic spike, or one-off job can look anomalous even when expected. This is why human awareness and change context remain essential.
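One common way to feed change context to an agent is a list of planned change windows. The sketch below assumes a hypothetical `ChangeWindow` record and simply labels anomalies that fall inside a window as expected.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class ChangeWindow:
    service: str
    start: datetime
    end: datetime
    reason: str


def classify(service: str, at: datetime, windows: List[ChangeWindow]) -> str:
    """Label an anomaly as expected when it falls inside a planned change window."""
    for window in windows:
        if window.service == service and window.start <= at <= window.end:
            return f"expected ({window.reason})"
    return "unexpected"
```

Labeling rather than silently dropping the anomaly keeps a record for later review if the planned change turns out to have caused a real problem.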

Another constraint is data quality. Agents amplify what they see. Missing metrics, noisy logs, or inconsistent naming produce unreliable decisions. AI does not compensate for poor instrumentation. It exposes it faster.

In practice, AI agents work best as force multipliers. They reduce noise, surface patterns, and speed triage. They do not replace monitoring strategy or incident ownership.

The right mental model is simple: AI agents help you notice and prioritize. Humans still decide and fix.

Why AI agents are transformative for businesses

AI agents are changing how businesses operate, compete, and create value. By enabling smarter workflows and faster scaling, they empower organizations to deliver more personalized and impactful experiences. A closer look at the AI agent development process shows how these systems can be implemented to support diverse business processes. As more companies recognize this potential, the demand for specialized AI agent development services is growing rapidly to help organizations build and integrate these complex, autonomous systems.

1. Autonomy & efficiency

AI agents can operate with minimal human oversight. Once given a goal, they break it into smaller tasks, interact with tools and data, and adjust based on real-time inputs. This makes it possible for teams to scale operations without adding more staff.

From supply chain management to finance, AI agents are helping eliminate manual inefficiencies, speed up processes, and reduce errors. By offloading routine tasks, business teams can focus on strategy and high-impact work, making organizations faster and more productive.

Example: Penske uses AI agents in its Fleet Insight system to monitor and maintain over 200,000 trucks. Each truck is fitted with telematics devices that collect real-time performance data and send it to Penske headquarters.

Their proprietary AI analyzes this massive stream of data to detect early signs of maintenance issues. This proactive system helps prevent breakdowns, improves safety, and keeps trucks running smoothly.

2. Adaptive learning & decision-making

Many AI agents are built on machine learning or reinforcement learning, which means they improve over time. They learn from feedback, past interactions, or outcomes to make smarter decisions in future tasks. The ability of AI agents to adapt to new data and continuously evolve makes them invaluable.

Example: Loon, a former Alphabet subsidiary that shut down in 2021, used reinforcement learning to keep stratospheric balloons hovering over target areas. The balloons adjusted their altitude in real time by learning wind patterns, using past and live data to predict the best elevation.

As a result, they learned to “hover” accurately over hard-to-reach locations in Kenya and Peru, without direct human control.

3. Wide range of applications

AI agents are highly adaptable, making them valuable across many industries and use cases. Their flexibility allows them to handle diverse tasks such as:

- Customer support: Efficiently resolve tickets, manage live chats, and escalate issues intelligently when needed.

- Process automation: Orchestrate workflows across tools and teams.

- Business intelligence: Analyze data trends, generate insights, and recommend informed actions.

- RPA (Robotic process automation): Move beyond simple rule-based bots by using context-aware, intelligent automation for complex tasks.

Challenges in managing AI agents

AI agents are designed to make autonomous decisions on the fly and adapt to changing environments. While this flexibility makes them powerful, it also introduces a new class of management challenges.

1. Complexity & multi-stage reasoning

AI agents rarely handle tasks completely on their own. More often, they coordinate actions across multiple systems like APIs, databases, plugins, or even other agents.

Think of it like a relay race: each step passes the baton to the next. But if something goes wrong along the way, figuring out exactly where the problem started can be tough. Even a small glitch in one part can throw the entire process off course.

Let’s say you’ve built a travel-booking agent. It asks the user about dates and preferences, checks flight prices from multiple airlines, looks up available points from a rewards account, and then tries to book the ticket.

Now, imagine one of those airline APIs suddenly changes how it sends data. The agent might return wrong info or fail, and it’s not immediately clear why. To the user, it just looks broken.

So, how do you fix this?

The key is to make the agent’s steps visible. Instead of just logging the final answer, log each part of the process – what the agent asked, what each API returned, and what decisions it made along the way.

Tools like LangSmith or OpenTelemetry can help you track all this. With better visibility, it’s much easier to spot issues and keep your agents running smoothly.
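Before adopting a dedicated tool, the idea of logging every step can be tried with the standard library alone. The sketch below is an assumption about what a minimal step logger could look like; `StepLog` and its record fields are made up for this example and are not the LangSmith or OpenTelemetry API.

```python
import json
import time
import uuid
from typing import Any, Callable, List


class StepLog:
    """Collects one structured record per agent step for a single run."""

    def __init__(self) -> None:
        self.run_id = uuid.uuid4().hex
        self.records: List[dict] = []

    def step(self, name: str, fn: Callable[..., Any], *args: Any) -> Any:
        """Run one step and record its input, output or error, and duration."""
        started = time.perf_counter()
        record = {"run_id": self.run_id, "step": name, "input": repr(args)}
        try:
            result = fn(*args)
            record.update(status="ok", output=repr(result))
            return result
        except Exception as exc:
            record.update(status="error", error=f"{type(exc).__name__}: {exc}")
            raise
        finally:
            record["duration_ms"] = round((time.perf_counter() - started) * 1000, 2)
            self.records.append(record)

    def dump(self) -> str:
        """Return the run as JSON lines, ready to ship to a log pipeline."""
        return "\n".join(json.dumps(r) for r in self.records)
```

In the travel-booking scenario, wrapping each airline call in `log.step("airline_b_prices", fetch_prices, dates)` (with `fetch_prices` being your own function) would show which API returned an unexpected shape instead of only the final failure.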

2. Risk of unpredictable behavior

As AI agents interact with new data or even other agents, their behavior can change in ways you didn’t expect. Small updates to prompts, models, or data sources can lead to inconsistent or even harmful results if you’re not watching closely.

For example, a customer support agent trained on biased data might start repeating that bias in its replies. In these cases, quality control tools, such as an AI humanizer, can help ensure language stays natural and user-friendly.

To reduce this risk, it’s important to actively monitor how agents behave in the real world, not just in testing. Track changes over time, review their outputs regularly, and set up alerts for anything unusual. Use versioning so you always know which model or prompt is running. And where possible, create feedback loops so agents can learn safely from their mistakes.

3. Scalability & resource constraints

Capable AI agents can consume a lot of computing power, especially agents that need fast responses, memory, or planning. When many agents run at the same time, GPU or TPU demand, response latency, and cost can add up quickly and become a real challenge.

For example, a large company deploying hundreds of AI agents across teams might see its cloud bills spike if each agent is running heavy computations all the time.

To handle this, it’s important to optimize how your agents use resources. This might mean running only critical agents on powerful hardware, while lighter models handle simpler tasks. You can also batch requests or use caching to reduce repeated work.
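The routing and caching ideas above can be sketched as follows. The two model functions are hypothetical stand-ins that only count calls; the `critical` flag is an assumed routing signal.

```python
from functools import lru_cache

# Hypothetical stand-ins for real model calls; each counts how often it runs.
CALLS = {"light": 0, "heavy": 0}


def call_light_model(prompt: str) -> str:
    CALLS["light"] += 1
    return f"light-answer({prompt})"


def call_heavy_model(prompt: str) -> str:
    CALLS["heavy"] += 1
    return f"heavy-answer({prompt})"


@lru_cache(maxsize=1024)
def answer(prompt: str, critical: bool = False) -> str:
    """Route to the heavy model only when the task is marked critical."""
    if critical:
        return call_heavy_model(prompt)
    return call_light_model(prompt)
```

Caching like this only makes sense for requests where a repeated prompt should get the same answer; anything that depends on live state should bypass the cache.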

The importance of AI monitoring

As AI agents grow more autonomous and become deeply integrated into critical workflows, proper oversight becomes non-negotiable. Without it, agents can fail silently, make costly mistakes, or gradually lose effectiveness.

Why monitoring is essential

AI agents are dynamic systems that constantly interact with live data, APIs, and users. Because of this, things can sometimes go unexpectedly wrong. Without proper monitoring, issues like data errors, service outages, or model drift can slip by unnoticed, leading to incorrect decisions, slow responses, or even system crashes.

For example, imagine a customer support AI that relies on an external payment API. If that API changes without warning or goes down, the agent might start giving wrong payment statuses or fail to process refunds. Without monitoring, the team might only discover the problem after customers complain, damaging trust and increasing support costs.

AI monitoring helps by:

- Ensuring reliability: Keeping the agent stable despite changing data or system updates.

- Detecting failures early: Spotting errors before they impact users.

- Maintaining performance: Tracking if the AI’s accuracy or speed drops over time.

Just as any employee needs oversight, AI agents need constant visibility to work safely and effectively.

Key metrics & alerts

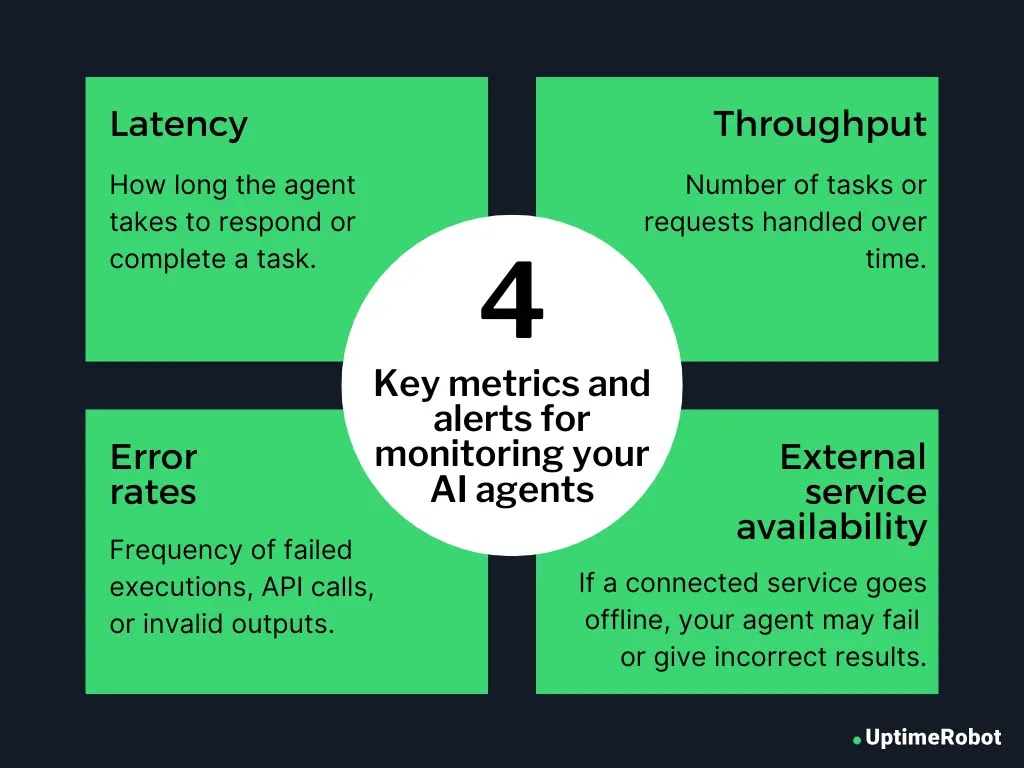

To keep your AI agents running smoothly, you need to track a few key metrics:

- Latency: How long the agent takes to respond or complete a task.

- Throughput: Number of tasks or requests handled over time.

- Error rates: Frequency of failed executions, API calls, or invalid outputs.

- External service availability: If a connected service (like an API or plugin) goes offline, your agent could break or give wrong results.

Set up smart alerts on these metrics so you’re notified right away if something looks off. That way, you can catch problems early, before they affect users or cause bigger issues.
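As a sketch of how the first three metrics might be computed from raw request records, the example below uses a nearest-rank 95th percentile for latency. The record shape and the alert limits (2,000 ms and 5%) are assumptions; tune them to your own service levels.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RequestRecord:
    latency_ms: float
    ok: bool


def summarize(records: List[RequestRecord], window_seconds: float) -> dict:
    """Compute p95 latency, throughput, and error rate for one time window."""
    if not records:
        return {"p95_latency_ms": 0.0, "throughput_rps": 0.0, "error_rate": 0.0}
    latencies = sorted(r.latency_ms for r in records)
    rank = (95 * len(latencies) + 99) // 100  # nearest-rank percentile, integer math
    failures = sum(1 for r in records if not r.ok)
    return {
        "p95_latency_ms": latencies[rank - 1],
        "throughput_rps": len(records) / window_seconds,
        "error_rate": failures / len(records),
    }


def alerts(summary: dict, max_p95_ms: float = 2000.0, max_error_rate: float = 0.05) -> List[str]:
    """Return the names of the metrics that crossed their limits."""
    fired = []
    if summary["p95_latency_ms"] > max_p95_ms:
        fired.append("latency")
    if summary["error_rate"] > max_error_rate:
        fired.append("error_rate")
    return fired
```

A percentile is used instead of the average because a handful of very slow agent runs can hide behind a healthy-looking mean.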

Observability & debugging

When your AI agent runs into problems, you need to understand why. That’s where observability tools come in handy:

- Logs: Keep detailed records of what your agent sees, says, and does step-by-step. This helps you trace exactly where things went wrong.

- Traces: Use visual maps to follow how a request moves through different parts of your system. They show you where delays or errors happen.

- Metrics: Track performance data over time to identify trends and catch sudden changes early.

Special considerations for monitoring AI agents

Monitoring AI agents requires more than just traditional logging and metrics. Because these systems are dynamic and made up of multiple interacting components, they evolve and behave differently from typical software. Here’s what to keep in mind when monitoring AI agents.

1. Real-time vs. batch analysis

Batch analysis, which collects data first and reviews it later, is fine for slower, less urgent systems. But AI agents work in real time, so batch analysis is too slow and can miss important problems as they occur.

Even short delays or downtime can hurt user experience and business outcomes. That’s why your monitoring needs fast alerts and automatic fixes – like fallback responses or rerouting – to keep things running smoothly.
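A fallback chain is one way to implement rerouting and fallback responses. In this sketch the providers are plain callables you supply, and the provider names and fallback text are placeholders.

```python
import logging
from typing import Callable, List, Tuple

Provider = Tuple[str, Callable[[str], str]]


def respond(prompt: str, providers: List[Provider],
            fallback: str = "We are having trouble right now. A human will follow up.") -> Tuple[str, str]:
    """Try each provider in order; return (source, text) from the first that works."""
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:
            # Log and reroute to the next provider instead of failing the request.
            logging.warning("provider %s failed: %s", name, exc)
    return "fallback", fallback
```

Returning the source alongside the text lets you track how often the agent is running on its backup path, which is itself a metric worth alerting on.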

2. Chained services & APIs

AI agents usually depend on a chain of services like language models, databases, APIs, and internal tools. If one link in that chain fails, it can cause problems throughout the whole system.

So, monitoring needs to trace the entire request path end-to-end. You should be able to pinpoint exactly which part is causing issues.
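To show what pinpointing a failing link can look like, here is a small nested-span tracer built on the standard library. It is a simplified stand-in for a real tracing system, and the `Tracer` class and span names are assumptions for the example.

```python
import time
from contextlib import contextmanager
from typing import List, Optional


class Tracer:
    """Records one span per link in the chain, including nested spans."""

    def __init__(self) -> None:
        self.spans: List[dict] = []

    @contextmanager
    def span(self, name: str):
        record = {"name": name, "status": "ok"}
        started = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record["status"] = "error"
            record["error"] = repr(exc)
            raise
        finally:
            record["duration_ms"] = round((time.perf_counter() - started) * 1000, 2)
            self.spans.append(record)

    def first_failure(self) -> Optional[str]:
        """Inner spans finish first, so the first error is the innermost failing link."""
        return next((s["name"] for s in self.spans if s["status"] == "error"), None)
```

For example, wrapping a request in `with tracer.span("request"):` and each dependency call in its own inner span means a timeout in a payments API is reported as `payments_api`, not just as a failed request.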

3. Adaptive or evolving models

AI agents often learn and change over time by updating their models, prompts, or plugins. Because of this, you need to keep track of which version is being used at all times. Treat these updates like software code, with version control and the ability to roll back if needed.

It’s also important to check the agent’s behavior regularly to catch any problems early. Without proper version tracking, it’s nearly impossible to tell if a problem comes from new agent behavior or a backend update.
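One lightweight way to track versions is to stamp every output with a fingerprint of the model, prompt, and plugins that produced it. The field names and the example model name below are assumptions for illustration.

```python
import hashlib
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class AgentVersion:
    model: str
    prompt_sha: str
    plugins: Tuple[str, ...]


def fingerprint(model: str, prompt: str, plugins: List[str]) -> AgentVersion:
    """Hash the prompt so any edit, however small, yields a new version."""
    prompt_sha = hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]
    return AgentVersion(model, prompt_sha, tuple(sorted(plugins)))


def tag_output(output: str, version: AgentVersion) -> dict:
    """Attach the version to each logged output so regressions can be traced."""
    return {"output": output, "version": asdict(version)}
```

When behavior changes, comparing the fingerprint on outputs before and after the change quickly shows whether the agent itself was updated or whether the cause lies in a backend it depends on.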

Example use cases

Let’s explore some real-world scenarios where AI agents make a big impact.

1. Customer support chatbot

AI-powered agents in customer support go far beyond answering basic questions. They help users reset passwords, track orders, resolve payment issues, and even suggest personalized services 24/7, at scale. But their role doesn’t stop there.

These agents also track important signals like user satisfaction, response times, and how often they fall back on generic answers when they don’t understand a request. Monitoring these metrics helps teams spot friction points early and improve how the agent responds.

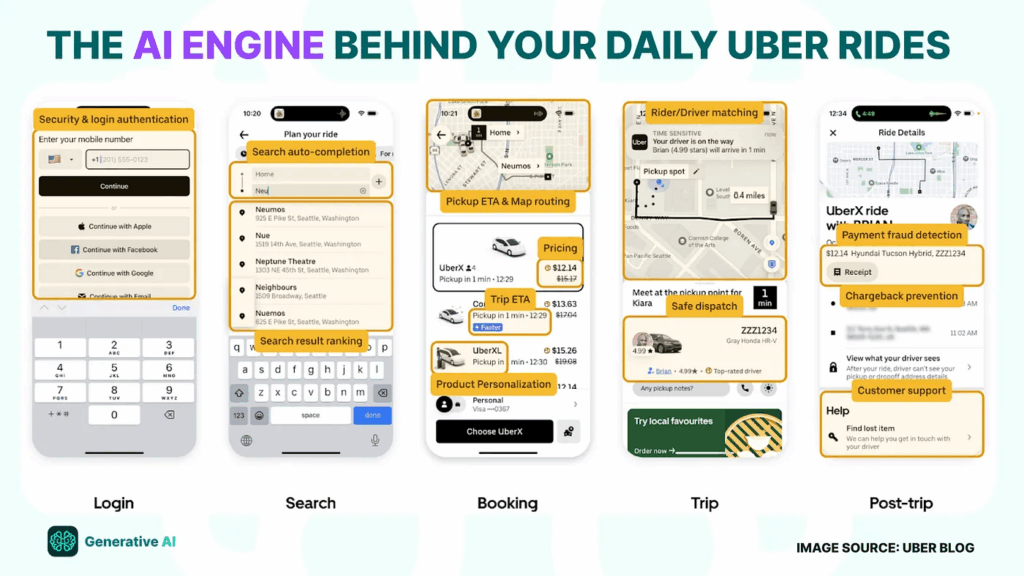

Real-world example: Uber

Uber leverages AI agents to generate conversational summaries, automate support investigations, provide empathetic and context-aware responses, and translate complex policies into simple, actionable steps for resolution.

These agents are trained to understand user intent and emotions, enabling faster and more human-like interactions across millions of support cases.

2. Autonomous data processing agent

Some AI agents work quietly behind the scenes, powering data workflows without user interaction. They automatically gather raw data, clean it, transform it, and load it into the right systems for analysis or reporting. Often running on schedules or continuously in the background, these agents handle tasks that would typically require teams of data engineers.

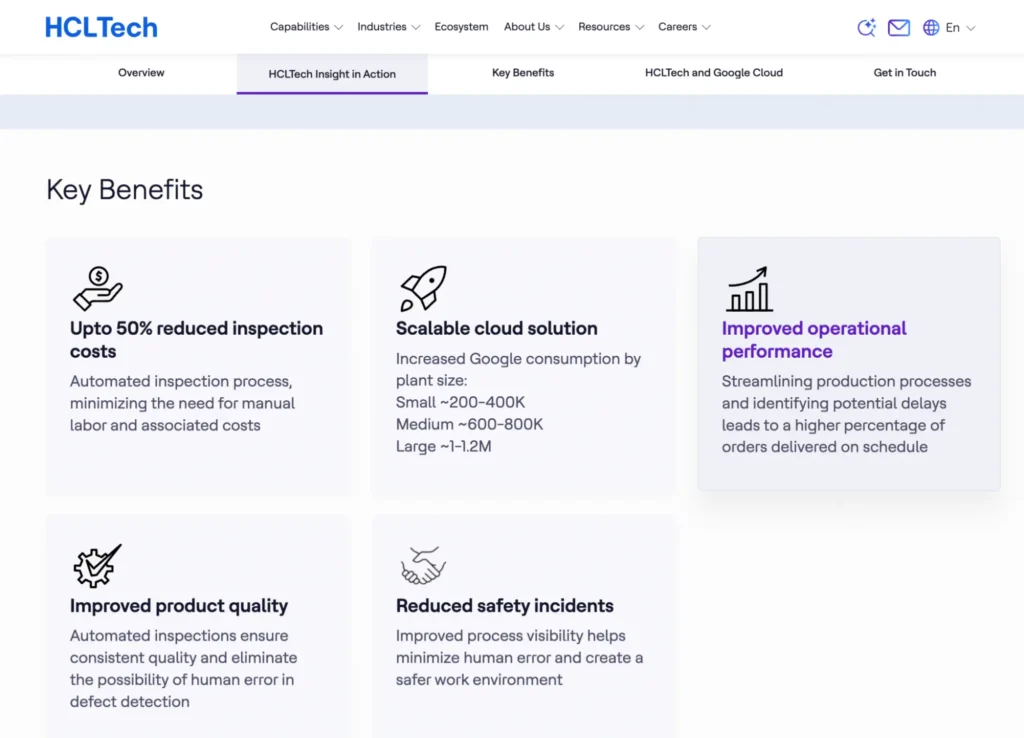

Real-world example: HCLTech

HCLTech’s Insight platform uses AI agents in manufacturing to analyze real-time data from sensors and production lines. These agents predict and help eliminate defects, improving product quality and boosting operational efficiency by catching problems before they escalate.

3. Multi-agent workflow orchestration

In more complex systems, AI agents often work together like a team, each handling a different part of a larger task. For example, one agent might pull in data, another analyzes it, and a third sends alerts or takes action based on the results. This kind of collaboration helps tackle complicated workflows that no single agent could manage alone.

Real-world example: IBM

IBM’s Watson Orchestrate has transformed its customer support by automating workflows across more than 80 enterprise apps like Oracle, Salesforce, and Workday. Using prebuilt agents, IBM handles common tasks such as password resets and account inquiries, reducing response times by 30% and improving case resolution rates by 25%. This frees human agents to focus on more complex issues, boosting overall customer satisfaction.

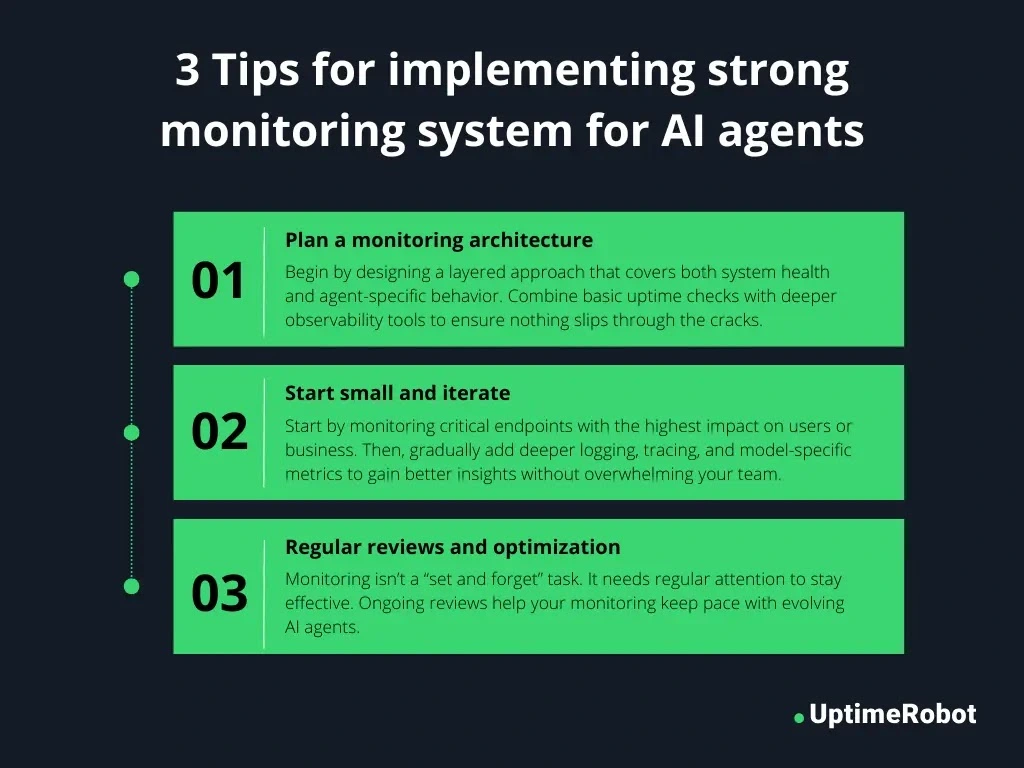

Implementation tips

Setting up a strong monitoring system for AI agents may seem complex at first, but with a clear strategy, it’s both manageable and scalable. The key is to start simple, focus on what matters most, and build up from there. Let’s look at a few practical tips:

1. Plan a monitoring architecture

Start by designing a layered approach that covers both system health and agent-specific behavior. Combine basic uptime checks with deeper observability tools to ensure nothing slips through the cracks. For example:

- Baseline monitoring: Use services like UptimeRobot, Pingdom, or StatusCake to continuously check if your public endpoints or core services are up and reachable. These tools are great for catching external outages or major failures.

- Deep observability: For internal monitoring, integrate platforms like Prometheus and Grafana for time-series metrics, or Datadog, New Relic, and Elastic for full-stack visibility. These help you monitor CPU usage, memory, request volumes, latency, and errors across services your agent depends on.

- Agent-specific debugging: Use AI-native observability platforms such as LangSmith, Arize AI, or Truera to track prompt performance, output accuracy, token usage, and agent reasoning steps. These tools give insight into how the agent is making decisions and where things may be going off track.

This layered architecture gives you both a high-level view of system uptime and a granular look at how the AI agent is performing under the hood.
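To illustrate what the baseline layer does, here is a minimal uptime and keyword check using only the standard library. It is a simplified sketch of the concept, not how any of the named services work internally; the `fetch` parameter is an assumption that makes the check easy to test without a network.

```python
import urllib.error
import urllib.request
from typing import Callable, Optional, Tuple


def http_fetch(url: str, timeout: float = 5.0) -> Tuple[int, str]:
    """Return (status code, body); HTTP error statuses are returned, not raised."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as exc:
        return exc.code, ""


def check(url: str, keyword: Optional[str] = None,
          fetch: Callable[[str], Tuple[int, str]] = http_fetch) -> dict:
    """Report whether an endpoint is reachable, healthy, and contains a keyword."""
    try:
        status, body = fetch(url)
    except OSError as exc:  # covers connection errors, DNS failures, and timeouts
        return {"url": url, "up": False, "reason": f"unreachable: {exc}"}
    if status >= 400:
        return {"url": url, "up": False, "reason": f"HTTP {status}"}
    if keyword and keyword not in body:
        return {"url": url, "up": False, "reason": "keyword missing"}
    return {"url": url, "up": True, "reason": "ok"}
```

The keyword check catches the case where an endpoint returns a 200 status but serves an error page or an empty response.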

For foundational monitoring, such as uptime, ping, port, and keyword checks, UptimeRobot helps ensure your core services stay reliable.

2. Start small and iterate

Begin by monitoring the most critical endpoints or workflows, those where failures would directly affect users or key business functions. Prioritize areas with the highest risk or visibility.

Once that’s in place:

- Add deeper logging of inputs, outputs, and decision paths to better understand agent behavior.

- Implement tracing to follow how requests flow across APIs, models, and internal services.

- Introduce model-specific metrics like response latency, confidence scores, and fallback rates to detect subtle degradation.

This incremental approach helps you gain essential visibility early on, without overloading your team or systems. Over time, you can expand your monitoring stack thoughtfully, based on what matters most.

3. Regular reviews and optimization

Remember, monitoring isn’t a “set and forget” task. It requires your consistent attention to remain effective. Establish a routine to:

- Review alert thresholds to minimize noise and avoid alert fatigue.

- Analyze usage patterns and performance trends to catch gradual changes or emerging issues.

- Refine alerting rules so you’re only notified about truly actionable events.

- Audit agent and model version logs alongside performance data to spot regressions or changes in behavior.

Ongoing review ensures your monitoring adapts as your AI agents evolve.
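The version audit in the routine above can be partly automated. This sketch assumes your logs can be reduced to `(version, ok)` pairs and flags any version whose error rate exceeds a baseline by more than a tolerance; the 2-point tolerance is an arbitrary example value.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def error_rate_by_version(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """records are (version, ok) pairs taken from agent logs."""
    counts = defaultdict(lambda: [0, 0])  # version -> [failures, total]
    for version, ok in records:
        counts[version][1] += 1
        if not ok:
            counts[version][0] += 1
    return {v: failed / total for v, (failed, total) in counts.items()}


def regressions(rates: Dict[str, float], baseline: str, tolerance: float = 0.02) -> List[str]:
    """List versions whose error rate exceeds the baseline by more than the tolerance."""
    base = rates[baseline]
    return sorted(v for v, rate in rates.items() if v != baseline and rate > base + tolerance)
```

Running this on each review cycle turns "did the new prompt make things worse?" from a hunch into a number.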

Conclusion and future outlook

AI agents are constantly advancing. As AI and machine learning progress, these agents will take on larger roles in areas like software development and security. But with greater capabilities comes greater responsibility. AI monitoring must keep up to ensure agents stay reliable, secure, and effective.

Sustaining AI agents over time

This becomes even more important as agents operate in dynamic environments. Changes to models, data sources, APIs, or business logic can all affect how they behave. To keep them dependable, monitoring must evolve alongside these changes.

Track model drift. Measure decision quality. Catch failures early. Use version control for models, prompts, and plugins to trace when updates lead to unexpected behavior.
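One common way to quantify drift in an input or score distribution is the Population Stability Index (PSI). The sketch below is a simplified, standard-library version; the bin count and the small floor for empty bins are assumptions, and PSI is only one of several possible drift measures.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when all baseline values are equal

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but the right cutoff depends on your data and should be validated against past incidents.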

Without regular oversight, AI agents can quietly lose accuracy or break down. This leads to poor outcomes and reduced trust. Long-term success depends on treating monitoring as an ongoing process that grows with your systems and goals.

The next frontier: Fully autonomous systems

Soon, AI agents will move towards full autonomy, where they can operate with little to no human oversight. These systems are already beginning to reshape industries by combining decision-making, monitoring, and self-governance into one seamless loop.

For example, Tesla leads the autonomous vehicle market with $6.35 billion in revenue and plans to launch robotaxis for an AI-driven ride-sharing network, potentially changing how we think about car ownership. In retail, stores like Amazon Go are expanding across the U.S., enabling customers to shop and pay without checkout lines.

As these fully autonomous systems mature, they will redefine how we live, work, and interact with the world.

Whether you’re already using AI agents or just starting to explore them, one thing is clear: deploying them is only half the job. To ensure they stay effective, efficient, and aligned with your goals, you need to monitor them continuously. Invest in the right monitoring tools, set up robust logging and tracing, and build a strategy that keeps your agents reliable for the long haul.

Use UptimeRobot to establish a solid foundation for monitoring your core systems.

FAQs

- What is an AI agent?

An AI agent is a software program that can make decisions and act on its own to complete tasks, based on input from its environment and data it processes.

- How are AI agents different from chatbots or rule-based automation?

Unlike basic chatbots or rule-based automation, AI agents can learn, adapt, and make complex decisions without human input. They handle dynamic workflows and improve over time.

- Where are AI agents used?

AI agents are used in customer support, data analysis, fraud detection, order processing, IT operations, software development, proactive supply chain management, and multi-agent workflow automation across industries like retail, finance, logistics, and healthcare.

- Why do AI agents need monitoring?

Because AI agents operate independently and may evolve over time, monitoring ensures they remain accurate, responsive, and secure. Without monitoring, agents may silently fail or behave unpredictably.

- Can AI agents fail or behave unpredictably?

Yes. Changes to APIs, models, data, or prompts can cause failures or unpredictable behavior. Continuous monitoring helps detect and resolve these issues early.

- How can I control the resource costs of running AI agents?

Use lightweight models for basic tasks, implement caching, batch requests, and monitor GPU/TPU usage to optimize performance and control cloud costs.

- What is model drift?

Model drift happens when an AI model’s performance degrades over time due to changes in data patterns. It can cause agents to make less accurate decisions and should be tracked regularly.

- Can AI agents be trusted with customer support?

Yes, if properly trained and monitored. Companies like Uber and Amazon use AI agents in customer support, but they also implement oversight systems to ensure accuracy and empathy in responses.

- What is the future of AI agents?

AI agents are moving toward full autonomy, where they can act, learn, and self-monitor with minimal human oversight. This will transform industries like transportation, e-commerce, and operations.